Set Up

In the summer of 2024 I got an internship at a company named Anvaya Solutions, working on adversarial machine learning: the cyber security of machine learning models. I took an interest in both attacking and defending these models. In this blog post I try to answer one question: does the number of possible responses a neural network can produce affect the number of poisoned samples required to incorrectly bias those responses?

Experiment

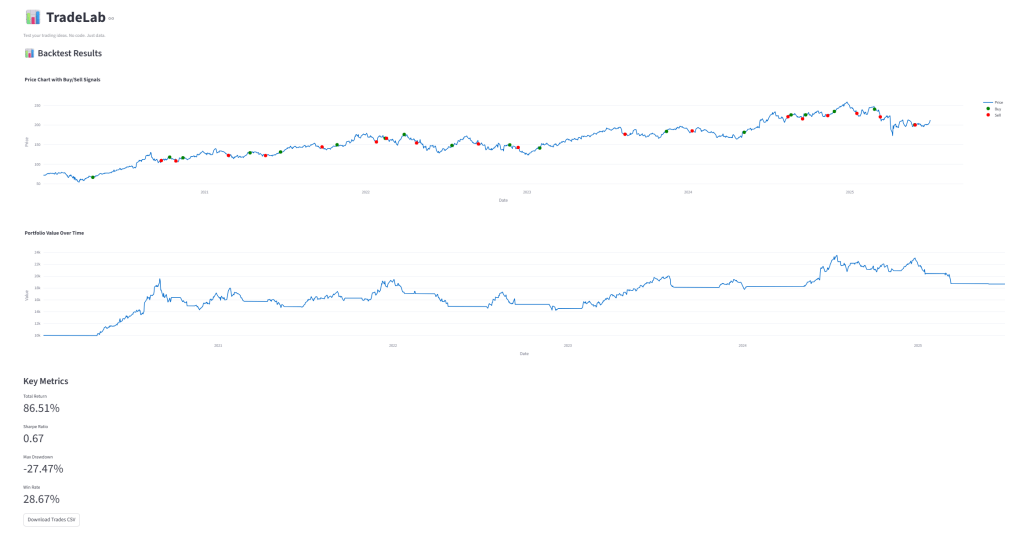

To answer this question, I collected results from five neural networks that I trained. The number of output classes for each network came from the set (2, 8, 16, 32, 64). The code for all of the networks is linked in the appendix. I performed poisoning attacks in which different fractions of the training data were intentionally mislabeled. How the poisoned sets were assembled is documented in the dataset accompanying the experiments.
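
As a rough illustration of what "intentionally mislabeled" can mean, here is a minimal sketch of a random label-flipping attack. It is an assumption about the general technique, not the exact code from my experiments: the function name `poison_labels`, the seed, and the toy label list are all made up for this example.

```python
import random

def poison_labels(labels, num_classes, fraction, seed=0):
    """Return a copy of `labels` with `fraction` of the entries flipped to a wrong class.

    Hypothetical helper for illustration; labels are integers in [0, num_classes).
    """
    rng = random.Random(seed)
    poisoned = list(labels)
    n_poison = round(fraction * len(poisoned))
    # Choose which samples to corrupt, without replacement.
    for i in rng.sample(range(len(poisoned)), n_poison):
        # Draw from the num_classes - 1 other labels so the new label is always wrong.
        wrong = rng.randrange(num_classes - 1)
        poisoned[i] = wrong if wrong < poisoned[i] else wrong + 1
    return poisoned

# Toy example: 1000 samples spread evenly over 8 classes, 25% poisoned.
clean = [i % 8 for i in range(1000)]
dirty = poison_labels(clean, num_classes=8, fraction=0.25)
changed = sum(c != d for c, d in zip(clean, dirty))
print(f"{changed} of {len(clean)} labels were flipped")
```

Because every flipped label is guaranteed to differ from the original, the fraction passed in is the true fraction of mislabeled samples, which keeps the x-axis of an accuracy-vs-poisoning plot honest.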

The figure shows the accuracy of each model as a function of the percentage of poisoned data in its training set. A clear trend emerges: models with a larger number of output classes degrade more rapidly when subjected to data poisoning. In particular, while the binary-class model required nearly 46% of its data to be poisoned before accuracy fell below 80%, the 32-class model required only ~27% poisoning to reach the same threshold.

To quantify this relationship, I fit each model’s accuracy curve to a four-parameter logistic function. This approach allowed me to estimate the rate of change in accuracy with respect to poisoned data, giving a clearer picture of how vulnerability scales with model complexity. The logistic fit highlights that as the number of outputs increases, the slope of the degradation becomes steeper.
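
For readers who have not met the four-parameter logistic before, the sketch below shows one common parameterization, along with how an "80% accuracy" threshold and a slope can be read off a fitted curve. The parameterization, the function names, and the parameter values are assumptions chosen for illustration; they are not the fitted values from my experiments. In practice the four parameters would come from a least-squares fit (for example with `scipy.optimize.curve_fit`).

```python
def logistic4(x, a, b, c, d):
    """Four-parameter logistic: accuracy at poisoning level x (x >= 0).

    a: accuracy with no poisoning, d: accuracy floor at heavy poisoning,
    c: poisoning level at the midpoint, b: steepness of the drop.
    """
    return d + (a - d) / (1.0 + (x / c) ** b)

def poison_level_at(y, a, b, c, d):
    """Invert the curve: poisoning level at which accuracy equals y (d < y < a)."""
    return c * ((a - d) / (y - d) - 1.0) ** (1.0 / b)

def midpoint_slope(a, b, c, d):
    """Slope of the curve at x = c, i.e. accuracy change per unit of poisoning."""
    return -(a - d) * b / (4.0 * c)

# Made-up parameters, NOT results from the experiment.
params = dict(a=0.9, b=3.0, c=30.0, d=0.2)
threshold = poison_level_at(0.8, **params)
print("poisoning level at 80% accuracy:", threshold)
print("slope at midpoint:", midpoint_slope(**params))
```

Comparing `midpoint_slope` across the fitted curves is one way to put a number on "degrades more rapidly": a more negative value means a steeper drop in accuracy.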

Theoretical Explanation

The results of the experiment can be explained by the complexity of the decision boundaries the network must learn, and by how errors propagate in multi-class classification.

- Decision Boundary Complexity

- A model with more classes must learn more intricate decision boundaries in the feature space. Each additional class increases the number of boundaries that need to be drawn between different regions. Poisoned data points can shift these boundaries disproportionately, especially in regions where classes overlap.

- Error Propagation in Multi-Class Settings

- In binary classification, poisoned data can only mislead the model in one direction. But in multi-class classification, poisoned samples can scatter across many possible incorrect labels, compounding the impact of relatively few corrupted examples.

Conclusions

| Number of output classes | % poisoned data before accuracy falls below 80% |
| --- | --- |
| 2 | 45.9 |
| 8 | 39.4 |
| 16 | 39.0 |
| 32 | 26.6 |
| 64 | 34.3 |

From these experiments, I conclude that neural networks with a greater number of output classes tend to be more vulnerable to data poisoning. The 64-class model tolerated more poisoning than the 32-class model (34.3% vs. 26.6%), which breaks the pattern and points to an outlier in the dataset, but the overall trend is clear: as classification complexity grows, the amount of poisoned data required to significantly degrade performance decreases.

This suggests that adversarial robustness in multi-class systems cannot be assumed to scale smoothly with model size. Instead, special care must be taken to ensure training data integrity, particularly in domains such as natural language processing or image recognition where the number of possible labels is large.

One major flaw in my own experiment is its limited scope. A more thorough study would extend the range of models tested, run repeated trials to mitigate outliers, and compare different poisoning strategies (e.g., targeted vs. random label flips). Unfortunately, with my limited resources I was unable to do this, as it would take far too long on a single computer. A larger dataset and greater computational resources would provide more statistically significant results and may reveal nonlinearities in the relationship between output size and vulnerability.

Appendix

All files and links can be found in this Google Drive folder: Effects of poisoning attacks on deep learning models